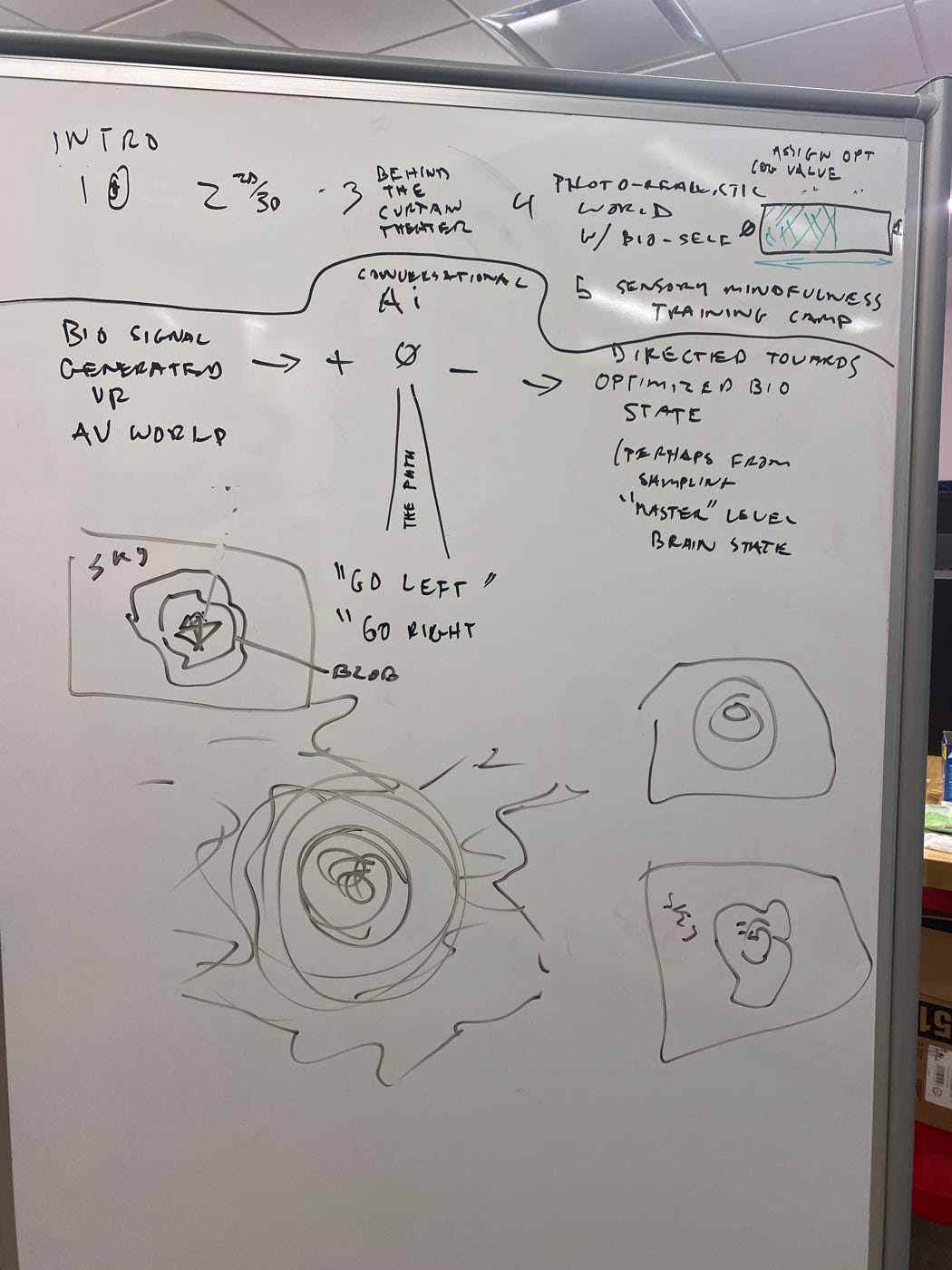

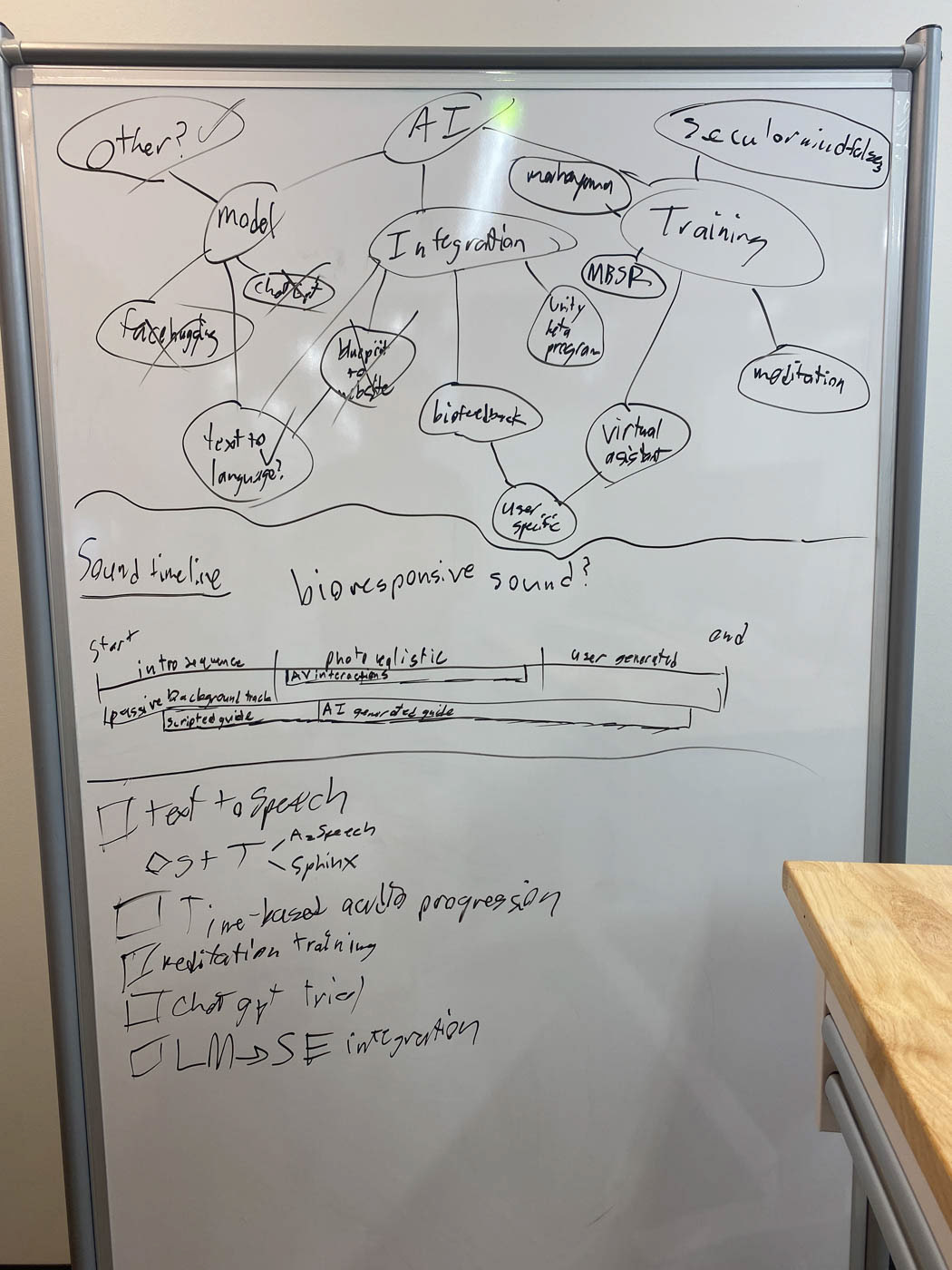

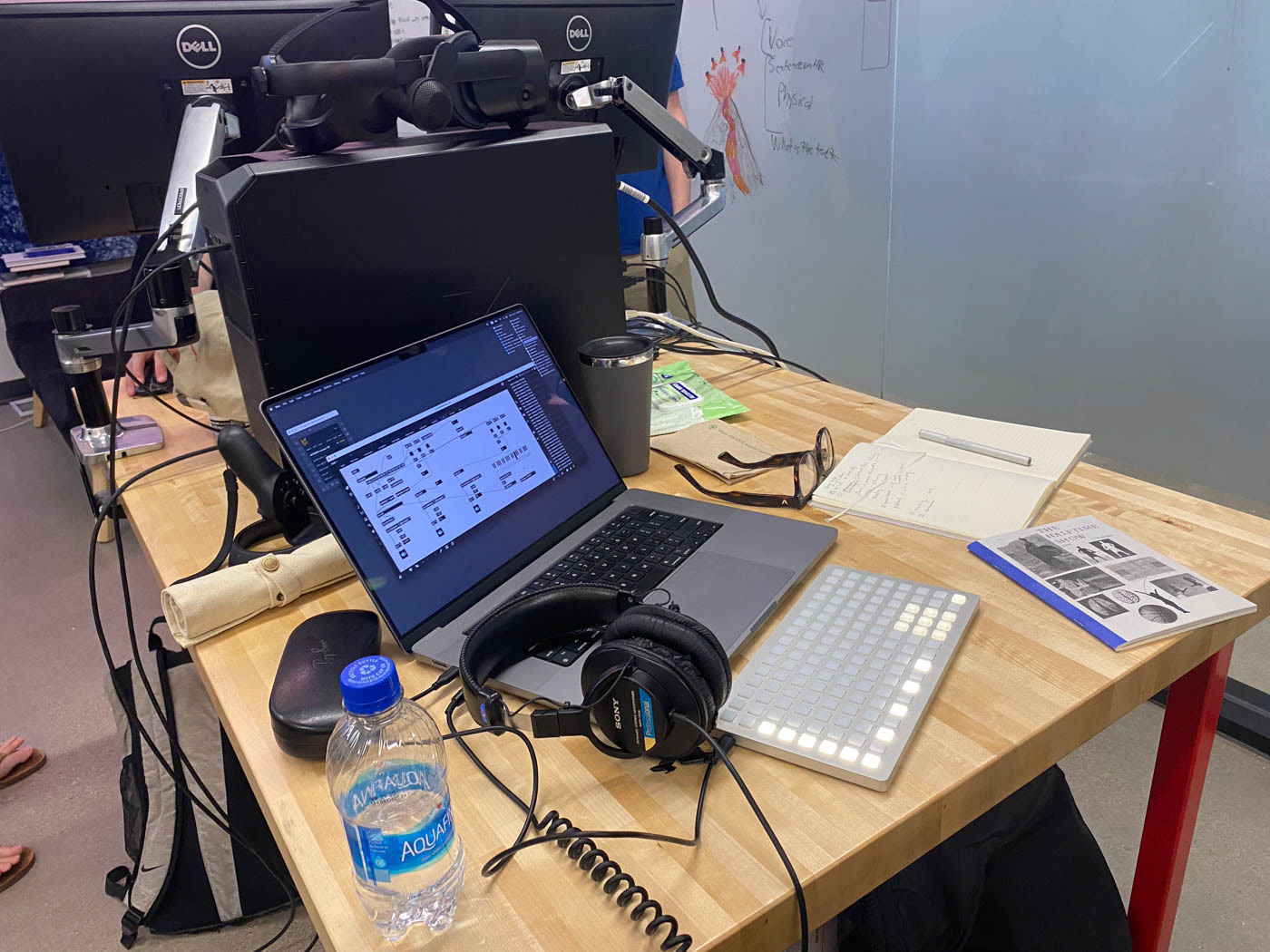

At the base level, Albert is a PC-based VR application. Its environments and code were built in Unreal Engine 5, and it uses a number of plugins to communicate with external tools and software, combining them into a single, cohesive experience.

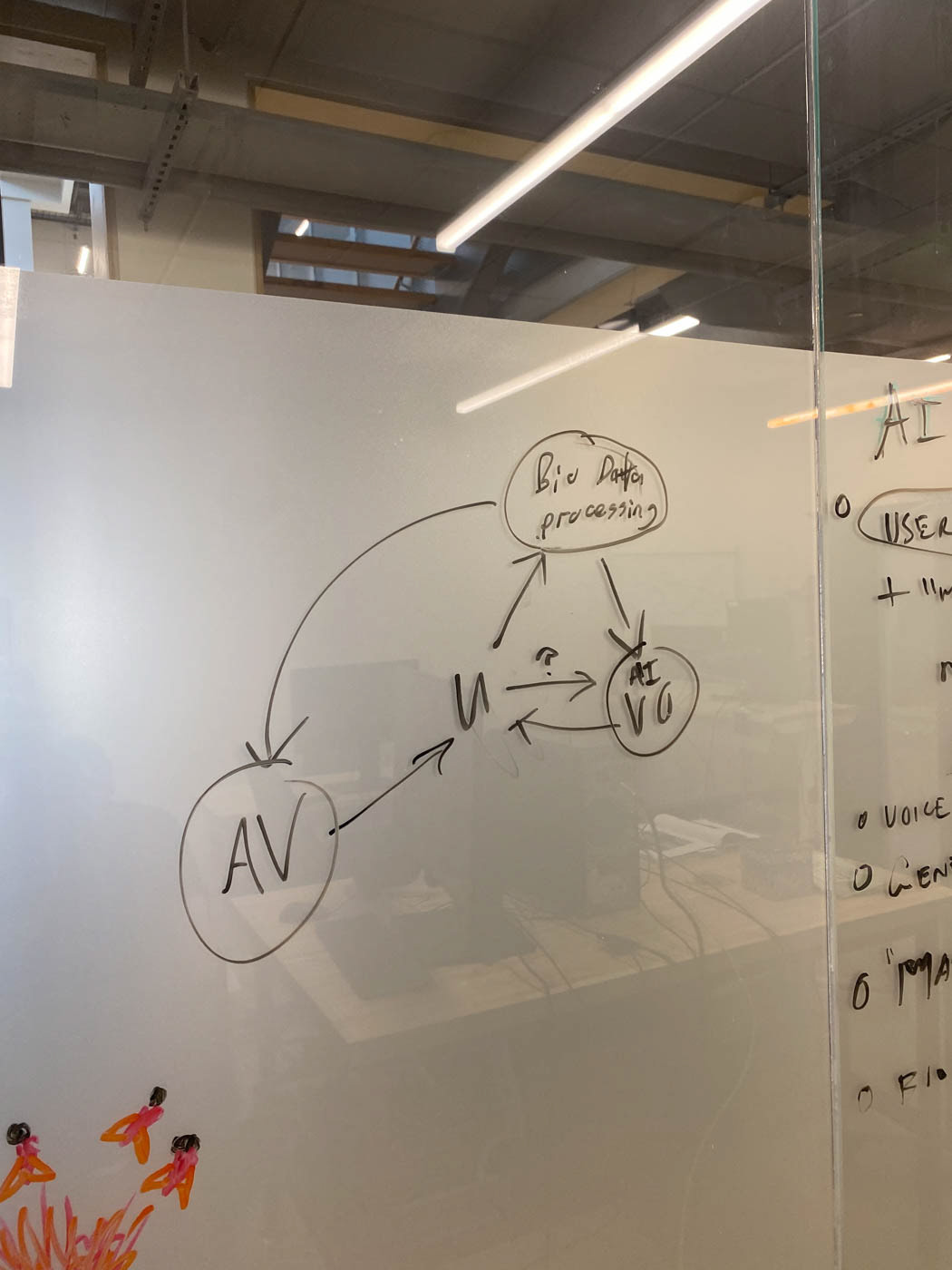

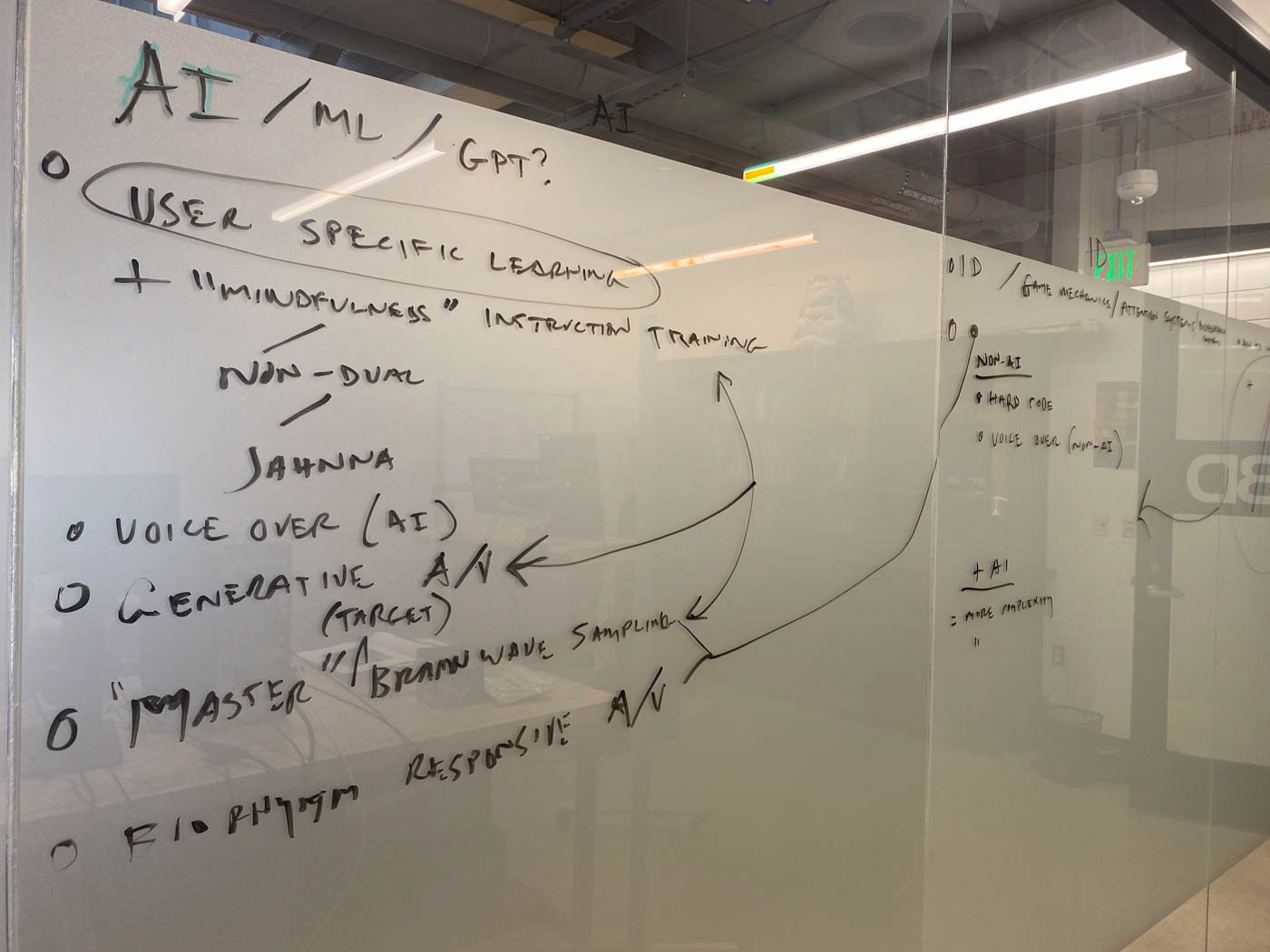

The first external tool Albert relies on is the HP Omnicept, a VR headset that gives us access to aspects of the user's biometric data. This includes real-time data such as eye tracking, pupil dilation, heart rate, and cognitive load. This data is used in a few separate ways. First, Albert uses it to affect and change the virtual world around the user, mirroring the user's physical and mental state in abstract ways.
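To make the idea of driving the world from biometric data concrete, here is a minimal Python sketch (not the project's Unreal code) of how one frame of headset readings might be held and normalized into 0 to 1 "drivers" for visual effects. The `BiometricSample` type, its field names, and every input range are hypothetical; in particular, it assumes cognitive load already arrives in the range [0, 1].

```python
from dataclasses import dataclass


@dataclass
class BiometricSample:
    """One frame of headset data (hypothetical field names and units)."""
    gaze_direction: tuple  # (x, y, z) unit vector in headset space
    pupil_diameter_mm: float
    heart_rate_bpm: float
    cognitive_load: float  # assumed to be reported in [0, 1]


def remap(value: float, in_min: float, in_max: float,
          out_min: float = 0.0, out_max: float = 1.0) -> float:
    """Linearly remap value from [in_min, in_max] to [out_min, out_max], clamped."""
    t = (value - in_min) / (in_max - in_min)
    t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


def normalized_inputs(sample: BiometricSample) -> dict:
    """Convert raw readings into 0..1 drivers for visual effects.

    The input ranges below are illustrative guesses, not values from the project.
    """
    return {
        "load": remap(sample.cognitive_load, 0.0, 1.0),
        "pupil": remap(sample.pupil_diameter_mm, 2.0, 8.0),
        "heart": remap(sample.heart_rate_bpm, 50.0, 120.0),
    }
```

Normalizing everything to a common range first means each effect (sky color, pulsing, fractal parameters) can consume a plain 0 to 1 value without knowing the sensor's units.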

In one part of the visualization, the user's cognitive load changes the color of the sky, ranging from blue to pink. Meanwhile, the spot where the user is looking causes parts of the environment to react both visually and audibly, and other parts of the environment pulse and move with an abstraction of the user's heart rate.
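The sky blend, gaze reaction, and heartbeat pulse described above could be approximated as follows. This is a hedged Python sketch: the endpoint colors, the 5 degree gaze cone, and the cosine pulse shape are all assumptions, and inside Unreal the gaze check would more likely be a line trace against scene geometry than this simple angle test.

```python
import math

# Hypothetical sky endpoints in linear RGB (0..1); the project's real colors are not documented.
SKY_CALM = (0.35, 0.55, 1.0)    # blue at low cognitive load
SKY_LOADED = (1.0, 0.45, 0.75)  # pink at high cognitive load


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def sky_color(cognitive_load: float) -> tuple:
    """Blend the sky from blue (load 0) to pink (load 1)."""
    t = max(0.0, min(1.0, cognitive_load))
    return tuple(lerp(c0, c1, t) for c0, c1 in zip(SKY_CALM, SKY_LOADED))


def is_gazed_at(eye_pos, gaze_dir, target_pos, max_angle_deg: float = 5.0) -> bool:
    """True if target_pos lies within a cone of max_angle_deg around the gaze ray."""
    to_target = [t - e for t, e in zip(target_pos, eye_pos)]
    dist = math.sqrt(sum(c * c for c in to_target))
    gaze_len = math.sqrt(sum(g * g for g in gaze_dir))
    if dist == 0.0 or gaze_len == 0.0:
        return False
    cos_angle = sum(g * c for g, c in zip(gaze_dir, to_target)) / (dist * gaze_len)
    return cos_angle >= math.cos(math.radians(max_angle_deg))


def heartbeat_pulse(time_s: float, heart_rate_bpm: float) -> float:
    """Smooth 0..1 pulse that completes one cycle per heartbeat."""
    beats_per_second = heart_rate_bpm / 60.0
    phase = 2.0 * math.pi * beats_per_second * time_s
    return 0.5 * (1.0 - math.cos(phase))
```

Each frame, an object that passes `is_gazed_at` could trigger its visual and audio reaction, while `heartbeat_pulse` can drive a scale or emissive value so the environment "beats" at the user's measured rate.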

Another part of the visualization uses a modified version of the Apollonian gasket fractal as the set piece for the scene. In this portion, we tie the user's biometric data to various parameters of the fractal. Both the scale and the number of iterations of the fractal are tied to cognitive load. Cognitive load is also mapped to a post-processing effect that shifts the positions of the visuals' color channels.
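The fractal set piece can be illustrated with a standard (unmodified) Apollonian gasket built from Descartes' circle theorem. This Python sketch is a stand-in for the project's modified version, and several pieces are assumptions: the starting configuration, the depth and scale ranges, the direction of the mapping (more load means more iterations and a larger scale), and the maximum color-channel offset. Each new circle comes from the "reflection" form of the complex Descartes theorem: given three mutually tangent circles with curvatures `k1, k2, k3` and centers `z1, z2, z3`, plus one known fourth circle `(k4, z4)`, the other tangent circle has `k' = 2(k1 + k2 + k3) - k4` and `k'z' = 2(k1 z1 + k2 z2 + k3 z3) - k4 z4`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    k: float    # signed curvature (1 / radius); negative for the enclosing circle
    z: complex  # center, as a complex number x + yi

    @property
    def radius(self) -> float:
        return abs(1.0 / self.k)


def reflect(a: Circle, b: Circle, c: Circle, old: Circle) -> Circle:
    """Return the second circle tangent to a, b and c (the one that is not `old`)."""
    k = 2.0 * (a.k + b.k + c.k) - old.k
    kz = 2.0 * (a.k * a.z + b.k * b.z + c.k * c.z) - old.k * old.z
    return Circle(k, kz / k)


def gasket(depth: int, scale: float = 1.0) -> list:
    """Circles of a standard Apollonian gasket, recursing `depth` levels into every gap."""
    outer = Circle(-1.0, 0j)                    # radius 1, encloses everything
    left = Circle(2.0, complex(-0.5, 0.0))      # radius 1/2
    right = Circle(2.0, complex(0.5, 0.0))      # radius 1/2
    top = Circle(3.0, complex(0.0, 2.0 / 3.0))  # radius 1/3
    start = [outer, left, right, top]
    circles = list(start)

    def fill(a: Circle, b: Circle, c: Circle, old: Circle, level: int) -> None:
        # Place the circle in the gap bounded by a, b, c, then fill the three smaller gaps.
        if level == 0:
            return
        new = reflect(a, b, c, old)
        circles.append(new)
        fill(a, b, new, c, level - 1)
        fill(a, c, new, b, level - 1)
        fill(b, c, new, a, level - 1)

    # Each triple of the starting quadruple bounds one gap; the fourth circle is "old".
    for i, old in enumerate(start):
        a, b, c = [s for j, s in enumerate(start) if j != i]
        fill(a, b, c, old, depth)

    # Uniform scale: radii grow by `scale`, so curvatures shrink by it.
    return [Circle(ci.k / scale, ci.z * scale) for ci in circles]


def gasket_params(cognitive_load: float, min_depth: int = 1, max_depth: int = 5,
                  min_scale: float = 0.8, max_scale: float = 1.2) -> tuple:
    """Map cognitive load (assumed 0..1) to (recursion depth, scale)."""
    t = max(0.0, min(1.0, cognitive_load))
    depth = round(min_depth + (max_depth - min_depth) * t)
    scale = min_scale + (max_scale - min_scale) * t
    return depth, scale


def channel_offsets(cognitive_load: float, max_offset_px: float = 6.0) -> dict:
    """Horizontal screen-space shift, in pixels, for each color channel."""
    d = max(0.0, min(1.0, cognitive_load)) * max_offset_px
    return {"r": (d, 0.0), "g": (0.0, 0.0), "b": (-d, 0.0)}
```

For example, a load of 0.5 maps to depth 3 at roughly unit scale. The circle count grows about threefold per level (20 circles at depth 2, 56 at depth 3), which is one reason to cap the depth when rendering at VR frame rates. `channel_offsets` only computes the per-channel shift; in Unreal the shift itself would happen in a post-process material.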

In addition, we make use of a few different AI tools, which let the user converse with an AI and ask for guidance about mindfulness practices. First, we transcribe the user's voice input to text using Vosk and combine it with context about what the user's biometric data indicates and what the visualization currently looks like. This is then sent to ChatGPT, which generates informed guidance to help the user in their mindfulness training. Finally, that guidance is spoken back to the user using Unreal's built-in text-to-speech.
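Before the request goes to ChatGPT, the transcript and the user's state have to be packed into a prompt. The Python sketch below shows one plausible shape: it reads the `text` field from a Vosk final result (Vosk recognizers report final results as JSON with a `text` key) and builds a list of `role`/`content` messages in the format used by OpenAI's Chat Completions API. The prompt wording, the load thresholds, and the helper names are hypothetical, and the network call and text-to-speech step are left out.

```python
import json


def transcript_from_vosk(result_json: str) -> str:
    """Extract the recognized text from a Vosk final-result JSON string."""
    return json.loads(result_json).get("text", "").strip()


def describe_load(load: float) -> str:
    """Coarse label for cognitive load (thresholds are illustrative)."""
    if load < 0.33:
        return "low"
    if load < 0.66:
        return "moderate"
    return "high"


def build_messages(transcript: str, heart_rate_bpm: float,
                   cognitive_load: float, scene_description: str) -> list:
    """Pair what the user said with their current state, as chat-style messages."""
    system = (
        "You are a calm mindfulness guide inside a VR experience. "
        "Reply in two or three short sentences suitable for text-to-speech."
    )
    context = (
        f"Heart rate: {heart_rate_bpm:.0f} bpm. "
        f"Cognitive load: {describe_load(cognitive_load)} ({cognitive_load:.2f}). "
        f"Current scene: {scene_description}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"{context}\nUser said: {transcript.strip()}"},
    ]
```

The returned list would be passed as the `messages` argument of a chat completion request. Putting the biometric context in the same user turn as the transcript lets each reply reflect the user's state at the moment they spoke.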